You are viewing the website for the AAC-RERC, which was funded by NIDRR from 2008 to 2013.

For information on the new RERC on AAC, funded by NIDILRR from 2014-2019, please visit rerc-aac.psu.edu.

Face2Face Technologies

Jeff Higginbotham (SUNY at Buffalo)

Cre Engelke (UCLA)

Michael Williams (ACI)

UltraTec (corporate partner)

Challenge:

Most microcomputer-based AAC technologies are designed for composing text or generating message content. Comparatively few AAC devices provide the specific tools to support face-to-face social interaction. The ability to engage in competent real-time social interactions forms a foundation for social, academic and employment success.

Goals:

This project seeks to create new AAC technologies to conduct social interactions utilizing:

- Recent advances in hardware and software technologies such as digital paper, emotive speech synthesis, gesture recognition, etc.

- Recent social interaction research in AAC

Activities:

- Develop technology tools to document interactions, assess technology use, and collect information about user goals, values and preferences.

- Prototype technologies that address specific interaction activities

- Integrate successful prototypes and transfer the technology into the commercial sector.

Progress

Develop Technology Tools

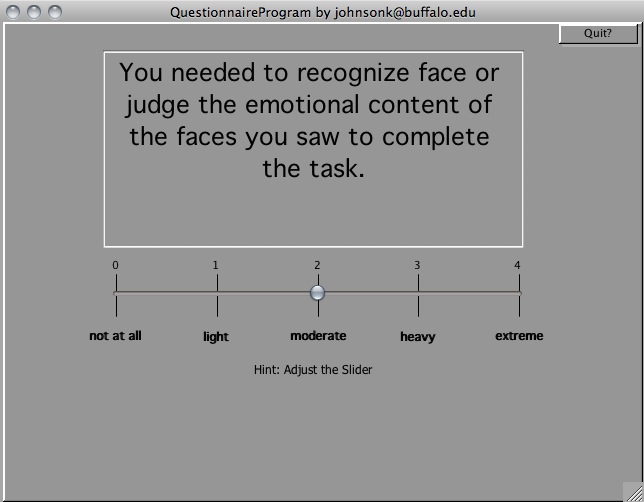

QP: General Purpose Questionnaire Program. Developed with Revolution Studio, QP is a software program designed to present the most common types of questions, e.g., multiple choice, numerical range, fill-in-the-blank, or simple acknowledgement. In addition to the text of a question, the program can present sound, graphic and video stimuli. QP is available for Windows and Mac, and a questionnaire is built by running a simple script. The QP application, help file, and examples, including scripts for the Multiple Resource Questionnaire (Boles, 2007) and the NASA Task Load Index (NASA-TLX), are available <HERE>.

(Example of a QP questionnaire page)
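QP's own scripts are written for Revolution Studio and are not reproduced here. The Python sketch below is a rough, language-neutral illustration of the four question types described above. It models each question as a record and checks whether a response is valid for that question. The `Question` class, the `kind` labels, the `accepts` function and the stimulus file name are hypothetical and do not reflect QP's script format. The rating item borrows the wording of the NASA-TLX Mental Demand scale, treated here as a simple 0–100 range.

```python
from dataclasses import dataclass, field
from typing import Any, List, Optional


@dataclass
class Question:
    kind: str                                          # hypothetical labels: "choice", "range", "text", "ack"
    prompt: str
    options: List[str] = field(default_factory=list)   # used by "choice"
    low: float = 0.0                                   # used by "range"
    high: float = 0.0                                  # used by "range"
    stimulus: Optional[str] = None                     # optional sound/graphic/video file shown with the prompt


def accepts(q: Question, answer: Any) -> bool:
    """Return True if `answer` is a valid response to question `q`."""
    if q.kind == "choice":
        return answer in q.options
    if q.kind == "range":
        # Exclude bools, which Python otherwise treats as the integers 0 and 1.
        return (isinstance(answer, (int, float)) and not isinstance(answer, bool)
                and q.low <= answer <= q.high)
    if q.kind == "text":
        return isinstance(answer, str) and answer.strip() != ""
    if q.kind == "ack":
        return answer is True
    raise ValueError(f"unknown question kind: {q.kind!r}")


# A tiny hypothetical "script": an ordered list of questions presented in turn.
script = [
    Question("ack", "Press OK when you are ready to begin."),
    Question("choice", "Which hand did you use?", options=["left", "right", "both"]),
    Question("range", "Mental Demand: How mentally demanding was the task?", low=0, high=100),
    Question("text", "Describe any problems you had.", stimulus="clip01.mov"),
]
```

Keeping all four types in one record keeps the script a flat, ordered list, which is easy to author by hand.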

AAC Usability Toolkit (AAC-UT). The AAC-UT is a flexible tool for describing and evaluating the sufficiency of AAC interfaces as they are used for communication and device programming. It provides a set of evaluation criteria that help the researcher/designer describe device use problems. For each problem, these criteria specify the point in the cognitive action cycle at which it occurred, its probable causes, its ramifications, and the types of support present or absent during the event.

Currently, the AAC-UT evaluation comprises:

- Action assessment (construct and issue utterance, add content to button)

- Action description (a descriptive syntax of user and device actions)

- Error stage type (formation of goal, perform action, perceive system state)

- Source of error (no user model, user forgets, too many choices)

- Cost of error recovery (waiting, information loss, program crash, etc.)

- Type of feedback (click, insertion of letter or word, written response)

- How the user is guided to perform the operation (clear mental model, cultural knowledge, professional knowledge, actions must be memorized)
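The following Python sketch makes the coding categories concrete. It represents one coded AAC-UT event as a record and tallies events by error stage. The field names, the example events and the `tally_by_stage` helper are illustrative assumptions, not the toolkit's actual data format. The `ErrorStage` values are taken from the list above.

```python
from collections import Counter
from dataclasses import dataclass, field
from enum import Enum
from typing import Dict, List


class ErrorStage(Enum):
    GOAL = "formation of goal"
    ACTION = "perform action"
    PERCEPTION = "perceive system state"


@dataclass
class CodedEvent:
    start_ms: int                  # event start in the recording (hypothetical unit: milliseconds)
    end_ms: int                    # event end
    action: str                    # action assessment, e.g. "construct and issue utterance"
    description: str               # descriptive syntax of user and device actions
    stage: ErrorStage              # where in the action cycle the problem arose
    source: str                    # e.g. "user forgets", "too many choices"
    recovery_cost: List[str] = field(default_factory=list)  # e.g. ["waiting"]
    feedback: List[str] = field(default_factory=list)       # e.g. ["click"]
    guidance: List[str] = field(default_factory=list)       # e.g. ["actions must be memorized"]


def tally_by_stage(events: List[CodedEvent]) -> Dict[ErrorStage, int]:
    """Count how many coded events fall at each error stage."""
    return dict(Counter(e.stage for e in events))


# Invented example events, for illustration only.
events = [
    CodedEvent(1200, 4800, "add content to button", "user opens edit menu",
               ErrorStage.PERCEPTION, "no user model", ["waiting"], ["click"],
               ["actions must be memorized"]),
    CodedEvent(9100, 15400, "construct and issue utterance", "user selects wrong page",
               ErrorStage.ACTION, "too many choices", ["information loss"],
               ["insertion of letter or word"]),
    CodedEvent(20000, 21500, "construct and issue utterance", "user selects wrong page",
               ErrorStage.ACTION, "too many choices", ["waiting"], ["click"]),
]
print(tally_by_stage(events))
```

The error stage is kept as a closed set because the three stages above are exhaustive. The other categories are stored as free strings because the source lists them as examples rather than a fixed vocabulary.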

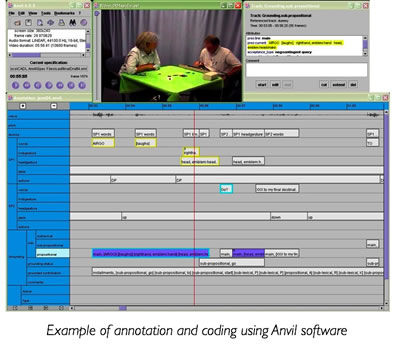

AAC-UT is implemented in ELAN, an open-source video annotation program. It gives the researcher/designer the ability to mark events of interest in an audio or video file, code and comment on each event, and search for similar events. It can also output a record of the transcribed video for further analysis or report writing. Please contact Jeff Higginbotham (cdsjeff@buffalo.edu) for AAC-UT scripts for use with ELAN.
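ELAN stores annotations in XML `.eaf` files. In these files, time-aligned annotations on a tier point to shared time slots. Suppose AAC-UT codes were entered as annotation values on an ELAN tier. A search for similar events could then be sketched with Python's standard `xml.etree.ElementTree`, as below. The tier name `Error stage`, the sample file content and the `find_coded_events` function are hypothetical, and the distributed AAC-UT scripts may organize tiers differently.

```python
import xml.etree.ElementTree as ET
from typing import List, Optional, Tuple

Hit = Tuple[Optional[int], Optional[int], str]  # (start ms, end ms, code)


def find_coded_events(root: ET.Element, tier_id: str, code: str) -> List[Hit]:
    """Return every time-aligned annotation on `tier_id` whose value equals `code`."""
    # Map time-slot IDs to millisecond values; unaligned slots have no TIME_VALUE.
    slots = {}
    for ts in root.iter("TIME_SLOT"):
        value = ts.get("TIME_VALUE")
        slots[ts.get("TIME_SLOT_ID")] = int(value) if value is not None else None
    hits = []
    for tier in root.iter("TIER"):
        if tier.get("TIER_ID") != tier_id:
            continue
        # Only ALIGNABLE_ANNOTATION is handled; REF_ANNOTATION (dependent tiers) is ignored.
        for ann in tier.iter("ALIGNABLE_ANNOTATION"):
            text = (ann.findtext("ANNOTATION_VALUE") or "").strip()
            if text == code:
                hits.append((slots.get(ann.get("TIME_SLOT_REF1")),
                             slots.get(ann.get("TIME_SLOT_REF2")), text))
    return hits


# A minimal hand-written EAF fragment (hypothetical tier and values).
SAMPLE_EAF = """<ANNOTATION_DOCUMENT>
  <TIME_ORDER>
    <TIME_SLOT TIME_SLOT_ID="ts1" TIME_VALUE="1200"/>
    <TIME_SLOT TIME_SLOT_ID="ts2" TIME_VALUE="4800"/>
    <TIME_SLOT TIME_SLOT_ID="ts3" TIME_VALUE="9100"/>
    <TIME_SLOT TIME_SLOT_ID="ts4" TIME_VALUE="15400"/>
  </TIME_ORDER>
  <TIER TIER_ID="Error stage" LINGUISTIC_TYPE_REF="default-lt">
    <ANNOTATION>
      <ALIGNABLE_ANNOTATION ANNOTATION_ID="a1" TIME_SLOT_REF1="ts1" TIME_SLOT_REF2="ts2">
        <ANNOTATION_VALUE>perceive system state</ANNOTATION_VALUE>
      </ALIGNABLE_ANNOTATION>
    </ANNOTATION>
    <ANNOTATION>
      <ALIGNABLE_ANNOTATION ANNOTATION_ID="a2" TIME_SLOT_REF1="ts3" TIME_SLOT_REF2="ts4">
        <ANNOTATION_VALUE>perform action</ANNOTATION_VALUE>
      </ALIGNABLE_ANNOTATION>
    </ANNOTATION>
  </TIER>
</ANNOTATION_DOCUMENT>"""

root = ET.fromstring(SAMPLE_EAF)
print(find_coded_events(root, "Error stage", "perform action"))
```

For a real file, `ET.parse("session.eaf").getroot()` (a hypothetical file name) would replace the inline sample.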

Prototype Technologies

Kindle Project (Michael Williams, Katrina Fulcher, Jeff Higginbotham). This is the first of several projects designed to evaluate emerging technologies that could support face-to-face interaction in AAC. The Kindle eReader was the first of a new generation of lightweight electronic information appliances with applications to AAC. It is light and easy to read, has a long battery life, provides speech output, and has customizable content. Deliverables for this project include:

In-depth review of the first Kindle by Michael Williams (pdf).

Comparison of the Sony Portable Reader (PRS-700) and the Kindle II by Katrina Fulcher (pdf).

Determining the usefulness of the Kindle as an AAC device by Katrina Fulcher (pdf).

2011 update

Recent work has focused on developing technologies that take advantage of a communication partner's linguistic input. The inTra project is developing technologies that leverage partner linguistic input to provide:

- text support for AAC users who prefer text-based input

- discourse and interaction support for communication partners

- linguistic support for word prediction

- phrasal elements for selection and manipulation

The picture below depicts the current prototype. It provides contextual support to communication partners by giving them a transcription of their speech, coordinated with the AAC user's message.

2012 update

Discourse and Interaction Support: Current work has focused on two areas. The first is analyzing interactions that use our prototype device. The second is integrating the prototype into a dedicated AAC system (Prentke Romich Echo II) for use in the field.

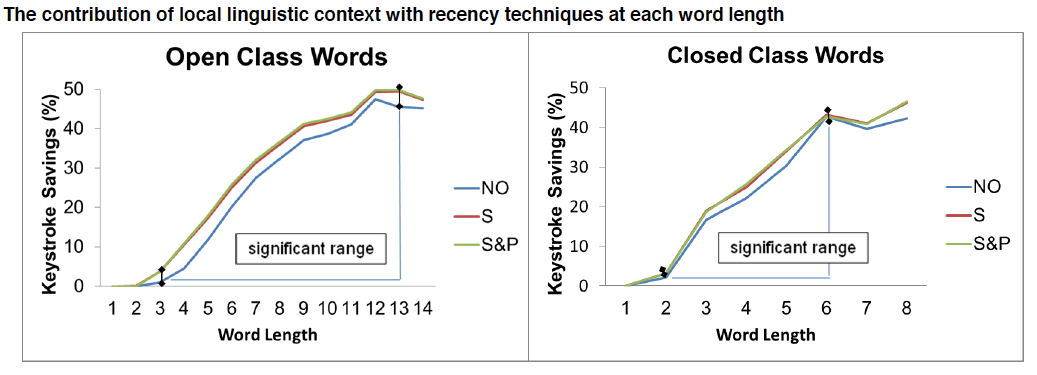

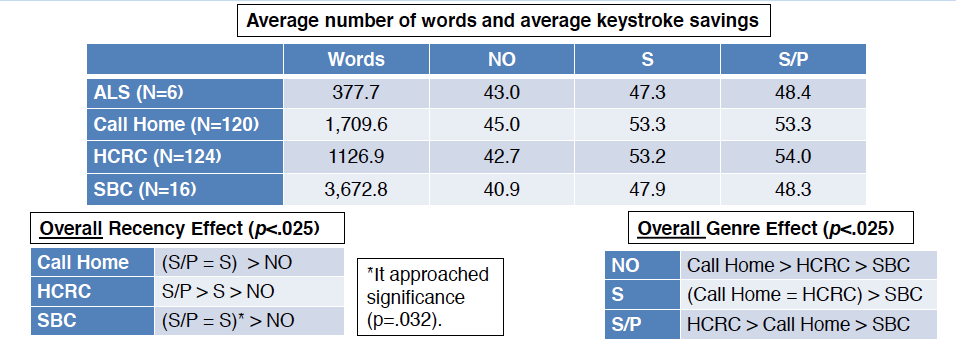

Linguistic Support for Word Prediction: We have just completed a series of analyses examining the effect of partner talk on word prediction at the word and discourse levels. Speaking partner talk (S & P) made small but statistically significant contributions to word prediction savings. These contributions differed across discourse genres and linguistic contexts.
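A toy simulation can illustrate the idea of letting partner talk inform word prediction. The Python sketch below ranks lexicon words by frequency and adds a fixed, hypothetical boost to words the partner just spoke. It then computes keystroke savings as the percentage of keystrokes saved relative to spelling every word letter by letter. Several choices are illustrative only: the lexicon, the boost value, the number of predictions shown (`k`), and the assumption that selecting a prediction costs one keystroke and inserts the trailing space. This is not the prediction model or analysis used in the study, and the toy numbers say nothing about the size of the reported effect.

```python
from typing import Dict, List


def predict(lexicon: Dict[str, float], prefix: str, k: int) -> List[str]:
    """Return the k highest-weighted words starting with `prefix` (ties broken alphabetically)."""
    candidates = [w for w in lexicon if w.startswith(prefix)]
    candidates.sort(key=lambda w: (-lexicon[w], w))
    return candidates[:k]


def keystrokes(words: List[str], lexicon: Dict[str, float], k: int = 3) -> int:
    """Count keystrokes to enter `words`, selecting a prediction as soon as it appears."""
    total = 0
    for word in words:
        cost = len(word) + 1  # fallback: spell the word, then type a space
        for typed in range(len(word)):
            if word in predict(lexicon, word[:typed], k):
                cost = typed + 1  # letters typed so far + one selection (assumed to add the space)
                break
        total += cost
    return total


def keystroke_savings(words: List[str], lexicon: Dict[str, float], k: int = 3) -> float:
    """Percentage of keystrokes saved relative to spelling every word letter by letter."""
    baseline = sum(len(w) + 1 for w in words)
    return 100.0 * (baseline - keystrokes(words, lexicon, k)) / baseline


def with_partner_context(lexicon: Dict[str, float], partner_utterance: str,
                         boost: float = 5.0) -> Dict[str, float]:
    """Copy the lexicon and add a fixed (hypothetical) boost to each word the partner just said."""
    boosted = dict(lexicon)
    for w in partner_utterance.lower().split():
        boosted[w] = boosted.get(w, 0.0) + boost
    return boosted


# Toy data, invented for illustration only.
BASE_LEXICON = {"the": 50, "to": 40, "water": 12, "want": 10, "walk": 5,
                "play": 4, "park": 3, "pizza": 1}
PARTNER_UTTERANCE = "Do you want pizza"
MESSAGE = ["want", "pizza"]

context = with_partner_context(BASE_LEXICON, PARTNER_UTTERANCE)
print("without partner talk:", round(keystroke_savings(MESSAGE, BASE_LEXICON, k=2), 1))
print("with partner talk:   ", round(keystroke_savings(MESSAGE, context, k=2), 1))
```

In this toy case the partner's "pizza" moves into the top two predictions after one letter instead of two. That is the kind of effect partner talk can have on savings.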

Work in these areas has been presented at several conferences and will appear in forthcoming publications in Augmentative and Alternative Communication and the Journal of Interactional Research in Communication Disorders (see below).

Knowledge Transfer

Higginbotham, D.J. (2009, February). When navigating the interaction timestream is your AAC device a kayak, an inner tube or a cement block (tied to your ankle)? Presentation at the Division 12 Augmentative and Alternative Communication Conference, American Speech-Language-Hearing Association, Rockville, MD.

Higginbotham, D.J., & Wilkins, D.P. (2009). In-person interaction in AAC: New perspectives on utterances, multimodality, timing and device design. Perspectives on Augmentative and Alternative Communication, 18, 154–160.

Higginbotham, D.J., Blackstone, S., & Hunt-Berg, M. (2009). AAC in the wild: Exploring and charting authentic social interactions. Miniseminar presented at the Annual Convention of the American Speech-Language-Hearing Association, New Orleans, November 18. (handout1, handout2, references)

Higginbotham, D.J. (2010). Humanizing vox artificialis: The role of speech synthesis in augmentative and alternative communication. In J. Mullennix & S. Stern (Eds.), Computer synthesized speech technologies: Tools for aiding impairment. Hershey, PA: Medical Information Science Reference. (link)

Higginbotham, D.J. (2009). Interacting through technology. Presentation to the International Society for the Analysis of Communication Discourse, New Orleans, November 19.

Higginbotham, D.J. (2010). Viewing AAC through authentic social interactions. Presentation to the International Conference on Technology for Persons with Disabilities, San Diego, March 25, 2010.

Higginbotham, D.J. (2010). Welcome to the machine: An analysis of co-participant interactions with AAC devices. Presentation to the International Society for the Analysis of Clinical Discourse, Dublin, Ireland, April 14, 2010.

Fulcher, K., Williams, M., & Higginbotham, J. (2010, November). Use of electronic paper technologies in face-to-face communication. Presentation at the American Speech-Language-Hearing Association Convention, Philadelphia, PA.

(poster)

Higginbotham, D.J. (2010). Design meets disability by Graham Pullin [Book review]. Augmentative and Alternative Communication, 26(4), 226–229.

Engelke, C.R., & Higginbotham, D.J. (2012). Looking to speak: On the temporality of misalignment in interaction involving an augmented communicator using eye-gaze technology. Journal of Interactional Research in Communication Disorders, 3(2), 115–142.

Higginbotham, D.J. & Engelke, C.R. (in press). A Primer for Doing Talk-in-Interaction Research in Augmentative and Alternative Communication. Augmentative and Alternative Communication.

Min, H., Higginbotham, D.J., Lesher, G., & Yik, T. (2012). Exploring the contribution of conversational context in word prediction. Poster presented at the American Speech-Language-Hearing Association, Atlanta, November.

Min, H., Higginbotham, D.J., Lesher, G., & Yik, T. (2012). Exploring the text level contribution of conversational context in word prediction. Poster presented at the International Society for Augmentative and Alternative Communication, Pittsburgh, August.

Files

Clinical Pathway for Swallowing for the Person with ALS (8.5x11) (220KB)

AACinTheWild01 (5322KB)

ComWildRefs (64KB)

AACinTheWild02 (4871KB)

MBW Rates the Kindle.pdf (790KB)
